Cathy O’Neil’s now famous book, Weapons of Math Destruction, discusses the pernicious feedback loop that can result from contentious “predictive policing” AI. She warns that the models at the heart of this technology can reflect damaging historical biases learned from the police records used as training data.

For example, a neighborhood may well have a higher number of recorded arrests because of past aggressive or racist policing policies, rather than a particularly high incidence of crime. But the unthinking algorithm doesn’t recognize this untold story and will blindly forge ahead, predicting that the future will mirror the past and recommending the deployment of more police to these “hotspot” areas.

Naturally, the police then make more arrests in these areas, and the net result is that the algorithm receives data that makes its association grow even stronger.
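To make the loop concrete, here is a toy simulation in Python. Everything in it is an illustrative assumption rather than anything taken from O’Neil’s book: two hypothetical neighborhoods, A and B, with identical true crime rates, an arrest history skewed by past policing, and a naive “hotspot” rule that sends the patrol wherever the most arrests have been recorded. Arrests are only recorded where police are present.

```python
def simulate_hotspot_loop(recorded_arrests, true_daily_crimes, days):
    """Toy model of a 'predict the past' patrol policy.

    recorded_arrests: neighborhood -> historical arrests on record
    true_daily_crimes: neighborhood -> crimes that actually occur per day
    (both are hypothetical inputs chosen for illustration)
    """
    recorded = dict(recorded_arrests)  # copy so the caller's history is untouched
    for _ in range(days):
        # The "model": assume the future mirrors the past and patrol the
        # neighborhood with the most recorded arrests so far.
        hotspot = max(recorded, key=recorded.get)
        # Crime happens everywhere, but only the patrolled area generates
        # arrests, so only the hotspot's record grows.
        recorded[hotspot] += true_daily_crimes[hotspot]
    return recorded


history = {"A": 60, "B": 40}    # skewed by past policing, not by crime
true_rate = {"A": 2, "B": 2}    # identical underlying crime (assumed)
after = simulate_hotspot_loop(history, true_rate, days=100)
share_a = after["A"] / sum(after.values())
print(after, round(share_a, 2))  # {'A': 260, 'B': 40} 0.87
```

Starting from 60 recorded arrests in A and 40 in B, a hundred days of this rule leaves A with 260 and B still at 40: A’s share of the record climbs from 60% to roughly 87%, even though nothing about the underlying crime has changed.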

O’Neil has observed that if police were to routinely search the offices of Wall Street bankers or the homes of celebrities, arrest rates would likely rise among these groups and in these neighborhoods. That information would then be fed in as data and would eventually begin to reshape the system’s predictions. But even then, the data wouldn’t reflect an accurate picture of all crime. It would only map the narrow category of “arrests made by police”.

To be truly accurate, the model would need information about all the crime that actually occurs, which would have to be collected by an omnipresent being…

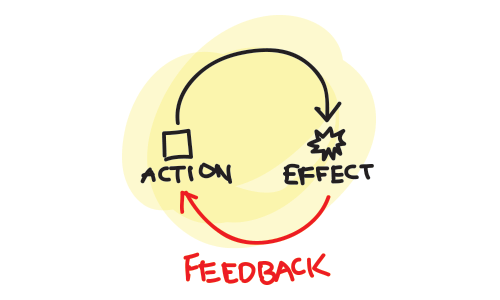

So it’s pretty clear that our current systems are challenged at best. And one of the huge problems for any similar AI, whether it’s predicting if someone will pay back a loan, make a good employee, or commit a crime based on their zip code, is that while it receives feedback on its successes, it is much less likely to find out when it gets things wrong. If an applicant is turned down for a job (even though they would have been great) or for credit (despite being good for it), or if someone is flagged as a likely offender (when they are in fact a paragon of virtue), there is no direct mechanism to feed that back. The system never gets to learn from its failures.
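The same asymmetry can be shown in a few lines of Python. This is a hypothetical lending sketch, not any real system: the `Applicant` class, the 0.5 approval threshold and the five applicants are all invented for illustration. The point is simply that outcomes are only ever recorded for the people the model approves.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    score: float        # the model's predicted likelihood of repaying
    would_repay: bool   # the true outcome, hidden from the lender


def observed_feedback(applicants, threshold):
    """Return the (score, outcome) pairs the lender ever gets to see."""
    feedback = []
    for a in applicants:
        if a.score >= threshold:
            # Approved: a loan is made, so repayment (or default) is recorded.
            feedback.append((a.score, a.would_repay))
        # Rejected: no loan is made, so no outcome is ever recorded.
    return feedback


def unseen_mistakes(applicants, threshold):
    """Count rejected applicants who would in fact have repaid."""
    return sum(1 for a in applicants if a.score < threshold and a.would_repay)


pool = [
    Applicant(0.9, True),
    Applicant(0.8, False),
    Applicant(0.4, True),
    Applicant(0.3, True),
    Applicant(0.2, False),
]
print(observed_feedback(pool, threshold=0.5))  # [(0.9, True), (0.8, False)]
print(unseen_mistakes(pool, threshold=0.5))    # 2
```

The lender’s data contains the one approved applicant who defaulted, so that error can be corrected; the two rejected applicants who would have repaid never appear in it at all.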

This is not a secret problem. AI developers are painfully aware that there is currently no straightforward way to combat biased data or solicit full feedback. Increasingly, however, they are making efforts to harvest this critical information wherever and however they can, not just in the name of ethics but also to ensure that their creations perform as accurately as possible.

At a recent event on artificially intelligent systems in San Francisco, one such developer was asked to describe exactly how he seeks this kind of feedback for his HR chatbot, which engages with job applicants at the beginning of the hiring process. How could he ever know if his bot’s manner or language deterred perfectly good candidates from continuing their applications? His answer, though inventive and well-meaning, strikes right at the heart of why this is such a hard ethical nut to crack. He explained that his company regularly asked survey respondents on Amazon’s Mechanical Turk to judge whether a range of bot behaviors were acceptable, and then used their answers to fine-tune the bot.

So, what’s wrong with this seemingly reasonable attempt to tackle a difficult problem? Well, I see at least two things.

The first is that people often aren’t aware of why they behave in certain ways, and can give woefully inaccurate predictions of how they would really react to something “in the wild.” We might tell a survey that we’d take something “well” or “badly”, but psychology has shown time and time again that we don’t know ourselves quite as well as we think.

The second problem is that the Mechanical Turk approach assumes something about ethics that philosophers have been tangling with for millennia: namely, that it can be crowdsourced. Just because a critical mass of people think a certain behavior is morally permissible does not make it so. For an obvious example, look at the wave of support Adolf Hitler secured in wartime Germany. Indeed, even some of the greatest minds have grappled with the mistaken inference that because something is a certain way, this is how it ought to be. And determining the latter is rather less straightforward than Silicon Valley might hope.

So here is the issue: we want to use and improve these systems, but it’s difficult to find a way forward that allows AI to learn directly about its failures and what it misses. We are not there yet, and we don’t seem to be getting much closer, even with the “space race” to develop the driverless car, the ultimate ethical hot potato.

This could well be an insoluble problem, destined to thwart tech for the coming century. It is certainly something to track, and to challenge wherever the answers are presumed to be easy, as they were in this instance.
